Can we imagine an AI artist mutilating itself in grief, as the painter Van Gogh once cut off his own ear? Hardly. Artificial intelligence is becoming more prevalent in many areas of life, and this trend will only intensify in the near future. While there is broad consensus from a scientific and technological point of view that these changes have an overall positive, productive effect on human well-being, the perception of AI in the arts is mixed.

The relationship between artificial intelligence and art can be traced back to the 1980s. It started with musical pieces created with the help of AI, followed by literature, film and the fine arts. The AI-based painting by the Obvious Art group sold for $432,500 at a Christie’s auction, instead of the $7,000-10,000 previously expected.

While artificial intelligence does not need a spectacular physical incarnation to paint, compose music, or write text, doing without one is inconceivable in the case of dance and other productions that express art through movement. Moreover, the possibility of a live performance makes the question even more exciting: can a dance performed by a robot be as creative as a human performance, which always carries the possibility of chance and improvisation?

The most famous piece of robot dance is a choreography by Boston Dynamics, a company well known for its running and jumping humanoid and dog-shaped robots. They used a song by the South Korean boy band BTS, and, as a reference to the seven members of the band, seven robot dogs perform the production. The performance is spectacular and dynamic. The angular movement of the robots is not disturbing in the dance music environment, and the adapted, transformed forms of movement are imaginative and lifelike.

Professional ballet dancer and quantum physicist Merritt Moore has been researching the connections between her two “professions” for a decade. During the Covid pandemic, in the absence of a partner, she began working with a robot from Universal Robots that was originally built for automation and manufacturing tasks. Teaching the machine to dance, rehearsing, and performing gave Moore a lot of insight into human movement. The finished production, on the other hand, is interesting rather than captivating: the dance of the industrial robot does not fascinate the viewer, even though its human partner does everything to make the performance enjoyable.

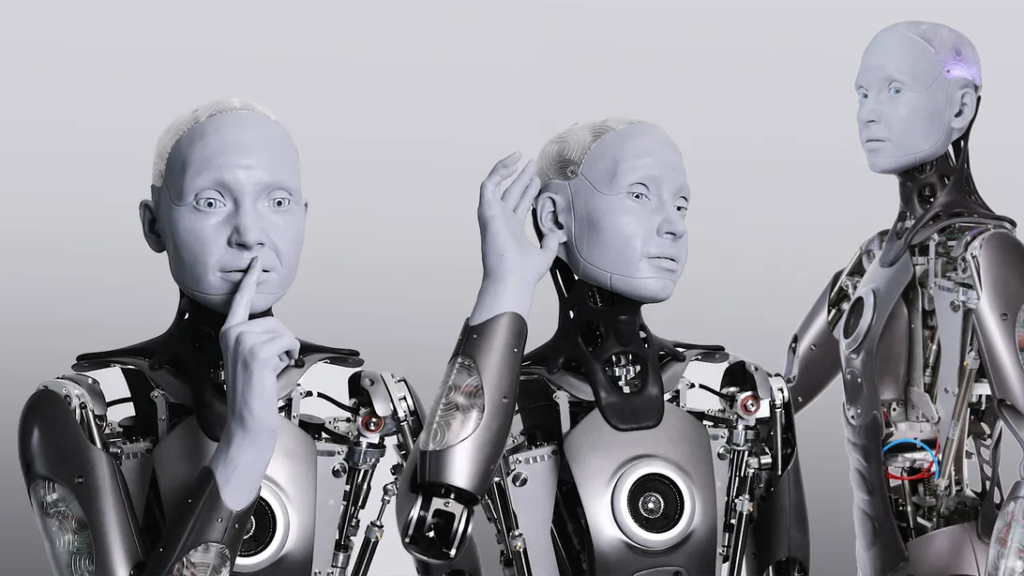

The imitation of human body movement is progressing despite the bumps along the way, and the experiments in speech skills, NLU and NLP are spectacular (we ourselves do similar research), but facial expressions are also important to the real performing arts. This year’s robot sensation is Ameca.

The humanoid creature responds to everyday situations with shockingly lifelike facial expressions. It shows wonder, it grieves, it wakes up sleepily. Those who interact with the robot see Ameca as either a man or a woman, depending on their temperament, upbringing, or, of course, their own lived gender. The manufacturers, however, intended the lifelike face and facial expressions to be gender neutral, so that they cannot be linked to either the male or the female gender. If Ameca is neither woman nor man, it can be said that a whole new approach to imitating human facial expressions has emerged.

A Shakespeare drama performed by robots no longer seems that far off.

But despite the progress, the questions remain. Will the performance really be enjoyable? Will the perfect reproduction of human movement and thinking not turn out poorer, and will it not lack creativity? What mathematical model will be suitable to describe this, given that no such work has existed before and the AI cannot predict whether the resulting work is aesthetic or harmonious? Is it possible to speak of originality, which forms the essence of art, when a work is created on the basis of hundreds of relevant data points from existing works of art, sound sequences, images, texts, and forms of movement? And who will be the celebrated star: the robot, the creative person still behind it, or the owner of the technology or the algorithm?